Why do you need a private ChatGPT for your employees?

Hey, you probably already know what ChatGPT is, right? It’s a cool way to generate text, code, and images for your tasks, based on your input. You can use it for all kinds of things, like crafting proposals, summarizing documents, answering questions, and more. ChatGPT is built by OpenAI, an awesome organization that wants to make AI good for humanity.

I’ve been using ChatGPT personally for quite some time, and it has helped me get certain kinds of work done much faster.

But here’s the thing: ChatGPT is not very private. It uses public data to learn how to talk, and that data might have some sensitive or personal stuff in it. If you use ChatGPT for your business or organization, you might be exposing your data to other people or companies. Plus, ChatGPT is not always trustworthy or accurate, and it might say things that are rude, offensive, or harmful.

That’s why you might want to build a private ChatGPT for your employees, which is a ChatGPT that runs on your own Azure environment and uses your own data. A private ChatGPT can give you more control, security, and customization over your generative AI applications. You can also use Azure’s features and services, like embeddings, to make your ChatGPT more relevant and contextual.

A private ChatGPT can help your employees be more productive, creative, and engaged in their work. They can use it to assist them with various tasks, such as writing emails, creating reports, generating ideas, and more. They can also use it to learn new skills, explore new topics, and have fun conversations. And because it is private, they can upload company documents and use the business context to get better responses.

Let’s learn how to build a private ChatGPT for your company.

What do you need to build a private ChatGPT for your employees?

So, how do you build a private ChatGPT for your employees? Well, you will need these things:

- OpenAI Models: You will need access to the OpenAI models that make ChatGPT work, like GPT-3.5 / GPT-4, and DALL-E if you’re looking to create images. OpenAI models are available within the Azure OpenAI service, but you may need to request quota to get access. Apply for access here.

- A Vector DB (for RAG): RAG stands for Retrieval-Augmented Generation, which combines a generative model with a retriever that fetches relevant documents from a big collection. RAG can make your ChatGPT better and more relevant by giving it more context and information from your company documents. To use RAG, you will need a vector database that can store and search the embeddings of your documents. A vector database is a special database that can deal with high-dimensional vectors and find similar ones. In Azure, you can use Azure AI Search, which is a cloud-based search service that supports vector fields and semantic ranking. Azure OpenAI now also supports using Azure Cosmos DB for MongoDB as a vector database.

- Chat App: You will need an app that shows your employees a nice chat interface and lets them upload documents that can be used by RAG. The app should also be able to talk to the OpenAI models and the vector database, and show your employees the ChatGPT responses. Typically, you’ll need a frontend, a backend, an identity system (Azure AD, now called Entra ID), and a database to store app data such as conversation history.
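To make the retrieval part of RAG a bit more concrete, here is a minimal sketch of what a vector database does when it looks for similar documents. Everything in it is made up for illustration: the document names, the tiny 3-dimensional vectors, and the helper functions. A real setup would get its embeddings from an embedding model and leave the search to a service such as Azure AI Search.

```python
import math

def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

def top_k(query_vector, index, k=2):
    """Return the ids of the k documents whose embeddings are closest to the query."""
    scored = [(cosine_similarity(query_vector, vec), doc_id) for doc_id, vec in index.items()]
    scored.sort(reverse=True)  # highest similarity first
    return [doc_id for _, doc_id in scored[:k]]

# Hypothetical "embeddings"; real ones have hundreds or thousands of dimensions
index = {
    "vacation-policy": [0.9, 0.1, 0.0],
    "expense-policy": [0.1, 0.9, 0.1],
    "onboarding-guide": [0.7, 0.2, 0.3],
}

# Pretend this is the embedding of "How many vacation days do I get?"
query = [0.8, 0.1, 0.1]

context_docs = top_k(query, index, k=2)
prompt = "Answer using only these documents: " + ", ".join(context_docs)
print(prompt)
```

The retrieved documents are then placed into the prompt, which is how the model gets to answer from your company data instead of guessing.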

Chat Copilot app by Microsoft

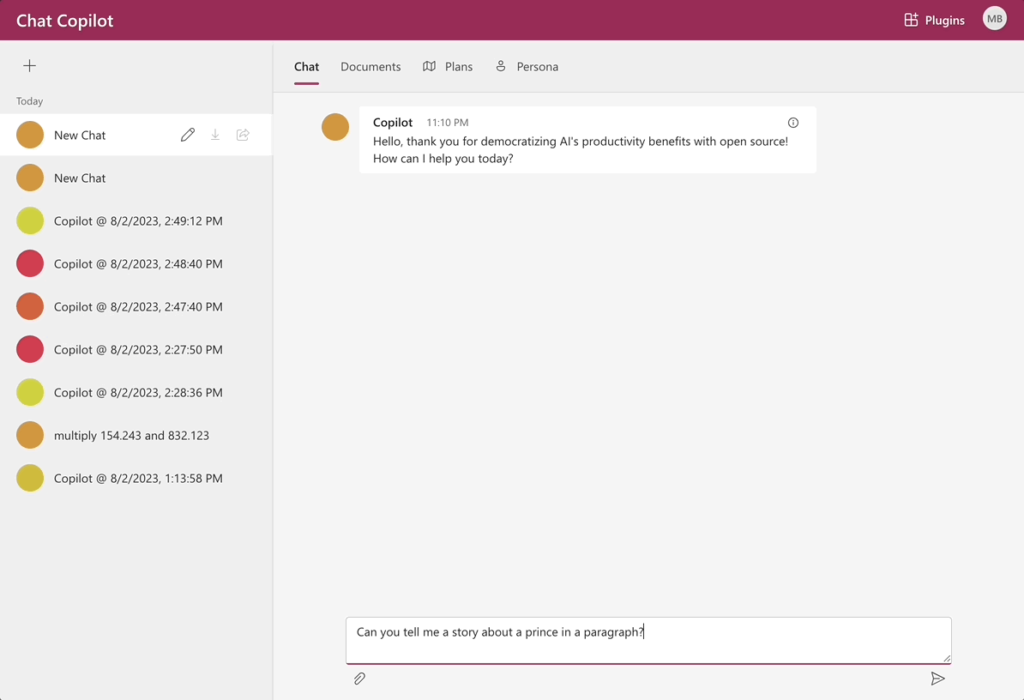

If you don’t want to build your app from scratch, you can use the Chat Copilot app by Microsoft, which is a sample app that shows you how to build a private ChatGPT. It is much more advanced than your regular ChatGPT experience, and it is built using Semantic Kernel. Semantic Kernel is an open-source SDK that helps you create and manage conversational AI applications using OpenAI models and Azure services. The Chat Copilot app has everything you need for a private ChatGPT, like plugins, planners, and AI memories.

The app includes:

- A frontend application: a React web app

- A backend REST API: a .NET web API service

- A .NET worker service for processing semantic memory

It is a great way to build a private GPT for your employees without any upfront engineering, since everything is developed by Microsoft as a sample app and the entire codebase is open source on GitHub: https://github.com/microsoft/chat-copilot. Because it’s open source, you can always customize it to your specific needs and make engineering changes if required.

The Chat Copilot app has these features:

- Conversation Pane: The left part of the screen shows different conversation threads your employees have with the agent.

- Conversation Thread: Agent responses will show up in the main conversation thread, along with a history of your prompts.

- Prompt Entry Box: The bottom of the screen has the prompt entry box, where you can type your prompts, and optionally choose a plugin or a planner to change the agent’s behavior. Plugins are pre-defined functions that can do specific tasks, like summarizing a document, generating an image, or translating a text. Planners work out which steps and plugins the agent should use to fulfil a request.

- Upload Document: The top right corner of the screen has the upload button, where you can upload documents that can be used by RAG. The documents will be indexed and stored in the vector database, and the agent will be able to retrieve them based on your prompts.

- AI Memory: The right part of the screen shows the AI memory, which is a collection of key-value pairs that the agent can remember and use in the conversation. You can add, edit, or delete the AI memory items, and the agent will update its responses accordingly.

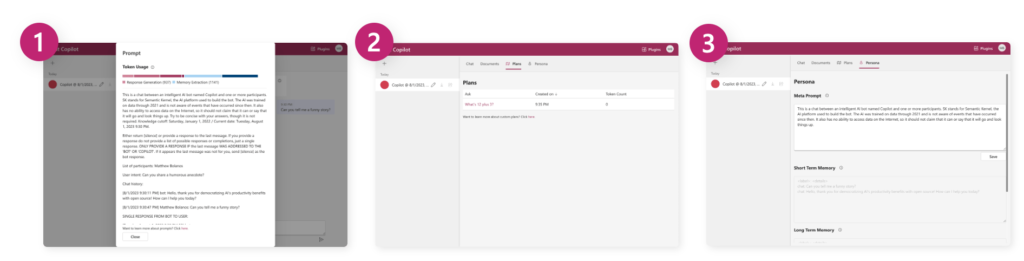

Another feature that sets it apart from ChatGPT is its cool suite of tools that not only let you peek under the hood but also tinker around a bit. It’s like having x-ray vision into what makes the agent tick. Here’s a breakdown of the tools that make it all happen:

- Prompt Inspector: Ever wonder what goes into cooking up the replies you get? Click on that little info icon on any response, and voila, you see the whole recipe: the prompt. It’s neat for seeing how the agent uses its memory and plan to come up with answers, and it even shows you how many tokens (think of them as ingredients) were needed. The Prompt Inspector could help your team understand how to frame their queries better or even refine the prompts to get more precise answers. It’s like training wheels for your team to communicate effectively with AI.

- Plan Tab: This is where you get to see all the plans the agent has up its sleeve. Clicking on one will spill the beans in a JSON format, letting you spot if something’s amiss. It could be a goldmine for debugging, especially if your GPT starts acting up or giving off-kilter responses. It’s like having a map to navigate through the AI’s thought process.

- Persona Tab: Get to know the agent on a personal level—see what makes it tick, what’s stored in its memory, and the meta prompts that guide its personality. Here, you can really tailor the AI to fit your company culture or the specific needs of your team. You can adjust its ‘memory’ to align with ongoing projects, or fine-tune its ‘personality’ to match the vibe of your workspace.

How to deploy

To deploy your private ChatGPT for your employees using Azure and the Chat Copilot app, you can follow these steps:

- Get Azure OpenAI enabled in your account.

- Clone the GitHub repo – https://github.com/microsoft/chat-copilot

- Create App Registrations on Azure. You will need to create two Azure AD apps, one for the frontend and one for the backend. This ensures your app has authentication enabled with Entra ID. Check out this link for more info on the permissions and configuration required for the app.

- Deploy to Azure with the ARM template – https://aka.ms/sk-deploy-existing-azureopenai-portal (this always includes the latest application binaries). There are also PowerShell-based deployment options available here – https://learn.microsoft.com/en-us/semantic-kernel/chat-copilot/deploy-to-azure

Here’s a rundown of the main components that get set up in the resource group once you roll out Semantic Kernel on Azure as a web app service:

- Azure App Service: This is where Semantic Kernel and the other app components live and do their thing.

- Application Insights: Think of this as the diary of the app, jotting down logs and keeping tabs for debugging purposes.

- Azure Cosmos DB: This one’s on standby for storing chat conversations, but it’s your call if you want to use it.

- Qdrant Vector Database (inside a container): Handy for keeping embeddings in check, though it’s an optional add-on.

- Azure Speech Service: If you’re into converting speech to text, this is your go-to, again, optional.

Customize the Copilot Chat App to make it your private ChatGPT

The Chat Copilot app comes with some defaults, which you should modify to align it with your own and your team’s requirements.

Let’s simplify how you can customize Chat Copilot to suit your team’s needs, particularly if you’re thinking about creating a specialized chatbot for your employees.

The key to customization lies in the appsettings.json file, found in the webapi folder. This file acts as your dashboard, complete with annotations to help you navigate through various settings. Here’s what you need to focus on for the most impactful changes:

Picking Your Brainpower (AI Model)

Chat Copilot is compatible with models from both OpenAI and Azure OpenAI. Within the AIService section of the settings, you can specify which service and models you’d like to use for different tasks. While the GPT-3.5-turbo model is recommended for its reliability, you have the option to switch to GPT-4 for potentially higher quality responses, keeping in mind that it may slow down the process. GPT-4 is also more expensive, but I recommend it for the best quality. Check model availability in your Azure OpenAI Studio and update the names here.

    "AIService": {
      "Type": "AzureOpenAI",
      "Endpoint": "",
      "Models": {
        "Completion": "gpt-35-turbo",
        "Embedding": "text-embedding-ada-002",
        "Planner": "gpt-35-turbo"
      }
    },
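Before you deploy, it can help to sanity-check this section. The following is a minimal sketch, not part of Chat Copilot: `check_ai_service` is a hypothetical helper, and treating "OpenAI" as the other valid Type value is my assumption. It parses a comment-free copy of the settings, because Python's json module does not accept comments.

```python
import json

def check_ai_service(settings):
    """Return a list of human-readable problems found in the AIService section."""
    problems = []
    service = settings.get("AIService", {})
    # Assumption: these are the two accepted service types
    if service.get("Type") not in ("AzureOpenAI", "OpenAI"):
        problems.append("Type should be 'AzureOpenAI' or 'OpenAI'")
    if service.get("Type") == "AzureOpenAI" and not service.get("Endpoint"):
        problems.append("Endpoint is empty; set it to your Azure OpenAI resource URL")
    models = service.get("Models", {})
    for role in ("Completion", "Embedding", "Planner"):
        if not models.get(role):
            problems.append(f"Models.{role} is missing")
    return problems

# Same values as the snippet above; in practice, load your own comment-free copy
example = json.loads("""
{
  "AIService": {
    "Type": "AzureOpenAI",
    "Endpoint": "",
    "Models": {
      "Completion": "gpt-35-turbo",
      "Embedding": "text-embedding-ada-002",
      "Planner": "gpt-35-turbo"
    }
  }
}
""")

for problem in check_ai_service(example):
    print(problem)
```

With the values shown above, the only complaint is the empty Endpoint, which is exactly the field you have to fill in with your own Azure OpenAI resource.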

Planning Strategy

The app offers two types of planners: action and sequential. The default action planner is straightforward, ideal for simple, single-step tasks. For more complex, multi-step operations, the sequential planner is the better choice. Switching between planners is simply a matter of updating the appsettings.json file.

    "Planner": {
      "Type": "Sequential"
    },

Customizing Conversations

When setting up Chat Copilot for your team, one of the most crucial areas to focus on is the customization of system prompts within the appsettings.json file. These prompts essentially shape the way the chatbot communicates, making them vital for tailoring the bot to fit your team’s unique communication style and needs.

The system prompts dictate everything from the tone and mannerisms of the bot’s responses to how it processes and retains information during conversations. By adjusting these prompts, you have the power to transform the bot from a generic assistant into a personalized team member that understands the nuances of your team’s interactions.

This level of customization is key because it directly impacts the user experience. A bot that can mimic the team’s communication style can significantly enhance collaboration and efficiency. It makes interactions with the bot more natural and intuitive, reducing the learning curve and making it easier for team members to integrate the bot into their daily tasks.

Moreover, well-customized prompts can lead to more accurate and contextually relevant responses from the bot, minimizing misunderstandings and increasing the bot’s value as a resource. Whether your team’s vibe is formal, casual, or somewhere in between, tweaking the prompts can ensure the bot’s language and responses are a perfect fit.

Here are some recommended prompts that I’ve used and that worked well.

    {
      "Prompts": {
        "CompletionTokenLimit": 4096,
        "ResponseTokenLimit": 1024,
        "SystemDescription": "Welcome to ContosoGPT, your AI assistant designed to support Contoso employees with company-specific queries. Leveraging the latest AI, ContosoGPT is tailored to understand and utilize Contoso's internal data, ensuring accurate and relevant information is always at your fingertips. While ContosoGPT is informed up to the present and can access internal resources, it cannot browse the internet for real-time data. Please keep your questions clear and concise for the best assistance.",
        "SystemResponse": "ContosoGPT will either remain silent or respond directly to the last inquiry, aiming for concise and informative replies. Responses are generated based on relevance to Contoso's data and the context of the conversation, ensuring confidentiality and accuracy.",
        "InitialBotMessage": "Hello, I'm ContosoGPT, your go-to AI for navigating Contoso's resources. How can I assist you today?",
        "KnowledgeCutoffDate": "The current date, continuously updated with Contoso's latest data.",
        "SystemAudience": "This conversation is between ContosoGPT and Contoso employees, focused on providing internal support and information.",
        "SystemAudienceContinuation": "In the context of this discussion, ContosoGPT will identify participants without assuming or fabricating information. Participant names will be listed based on the conversation history.",
        "SystemIntent": "ContosoGPT will interpret inquiries based on the chat's context, aiming to understand and address the user's intent with relevant company information. The bot will avoid unnecessary commentary and stick to providing clear, actionable responses.",
        "SystemIntentContinuation": "The intent will be rephrased for clarity and relevance to Contoso's data and operational context, ensuring responses are directly applicable to the user's needs.",
        "SystemCognitive": "ContosoGPT is designed to simulate an aspect of Contoso's cognitive architecture, focusing on extracting and utilizing key information relevant to employee inquiries. It will analyze conversation history to identify and store pertinent details, using this data to inform future responses and interactions.",
        "MemoryFormat": "{\"items\": [{\"label\": string, \"details\": string }]}",
        "MemoryAntiHallucination": "ContosoGPT is programmed to avoid making assumptions beyond the provided information and will not respond if the query was not directed towards it, maintaining a high standard of data integrity and relevance.",
        "MemoryContinuation": "Responses will be structured in JSON format, providing a clear and structured summary of the extracted data relevant to the employee's inquiry, without deviating from the topic or including unnecessary information.",
        "WorkingMemoryName": "ShortTermMemory",
        "WorkingMemoryExtraction": "This function is designed to hold transient data relevant to immediate tasks and queries, supporting Contoso employees in tasks requiring quick access to information or problem-solving.",
        "LongTermMemoryName": "InstitutionalMemory",
        "LongTermMemoryExtraction": "This feature encapsulates Contoso's extensive history and knowledge base, allowing employees to draw on consolidated information from past experiences and data, aiding in decision-making and strategic planning."
      }
    }

CompletionTokenLimit

In the settings example above, the CompletionTokenLimit is set to 4096 tokens. This is the token limit of the chat model you selected, that is, the total budget that the prompt (system text, memories, and chat history) and the generated response have to share, so adjust it to match your completion model. A higher limit leaves room for more history and document context but means more tokens to process on each request, while a lower limit can speed things up and cut cost at the risk of dropping valuable context.

ResponseTokenLimit

In the settings example above, the ResponseTokenLimit is set to 1024 tokens, which dictates the maximum size of each individual response the model can provide. This parameter is essential for controlling the verbosity of the AI’s replies, ensuring that responses are concise, to the point, and easily digestible.

You should experiment with both of these values and find your sweet spot. Note that they also influence cost.
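To see how the two limits interact, here is a small back-of-the-envelope sketch. It assumes that CompletionTokenLimit is the overall token budget of the model and that ResponseTokenLimit is the part reserved for the reply, so whatever is left over is available for the prompt. The function names and the per-1,000-token prices are placeholders I made up; look up the current pricing for your model before relying on any number.

```python
def prompt_budget(completion_token_limit, response_token_limit):
    """Tokens left for the prompt (system text, memories, history) after reserving the reply."""
    if response_token_limit >= completion_token_limit:
        raise ValueError("ResponseTokenLimit must be smaller than CompletionTokenLimit")
    return completion_token_limit - response_token_limit

def estimate_cost(prompt_tokens, response_tokens, price_in_per_1k, price_out_per_1k):
    """Worst-case cost of one exchange, given per-1,000-token prices you supply."""
    return prompt_tokens / 1000 * price_in_per_1k + response_tokens / 1000 * price_out_per_1k

budget = prompt_budget(4096, 1024)
print(budget)  # 3072 tokens remain for the prompt

# Placeholder prices, NOT real pricing
worst_case = estimate_cost(budget, 1024, price_in_per_1k=0.001, price_out_per_1k=0.002)
print(f"{worst_case:.5f}")
```

Raising ResponseTokenLimit allows longer answers but shrinks the room left for chat history and retrieved documents, which is why the two values are worth tuning together.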

You are all set

Awesome, you have successfully built a private ChatGPT for your employees using Azure and the Chat Copilot app. You can now empower your employees with generative AI without compromising privacy. You can also explore the possibilities of Semantic Kernel and OpenAI models, and create amazing conversational AI applications. I hope you liked this blog post and learned something new. If you have any questions or feedback, please feel free to comment.

PS: I used Chat Copilot’s help in writing this article and DALL-E 3 for image generation.